Hyperparameter Optimisation

Hyperparameter optimisation tunes a machine learning model by systematically searching for the combination of hyperparameters (settings fixed before training, such as learning rate or tree depth) that gives the best performance.
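As a generic formulation (not a description of evoML's internals), the search can be written as follows. Here Λ is the search space, A_λ is the learning algorithm configured with hyperparameters λ, and L is the loss of the model trained on D_train and measured on D_valid:

```latex
\lambda^{*} = \arg\min_{\lambda \in \Lambda} \; \mathcal{L}\left(A_{\lambda},\, D_{\text{train}},\, D_{\text{valid}}\right)
```

Each algorithm described below is a different strategy for choosing which λ to evaluate next.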

We provide built-in support for hyperparameter optimisation, using predefined default settings derived from extensive experimentation. Users can also tune model hyperparameters manually.

We offer three hyperparameter optimisation algorithms, described below.

Select Hyperparameter Optimisation in evoML

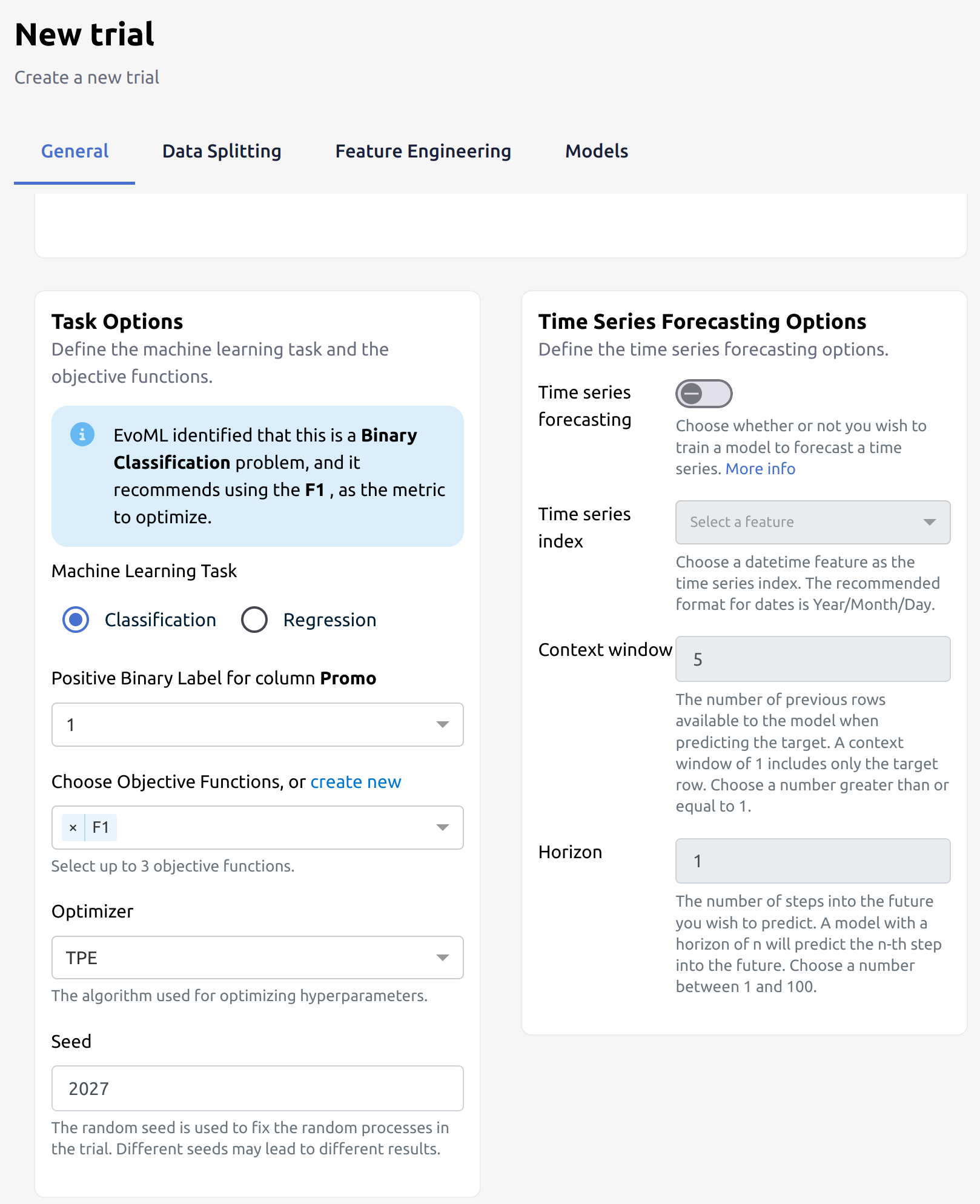

Create a New Trial

Under Task Options, choose Optimizer. The default hyperparameter optimisation strategy is the Tree-Structured Parzen Estimator (TPE).

Hyperparameter Optimisation Algorithms

1. Tree-Structured Parzen Estimator (TPE)

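TPE is a sequential model-based (Bayesian) optimisation algorithm. Instead of modelling the objective directly, it splits the trials evaluated so far into a small "good" group with the best scores and a "bad" group with the rest, fits a probability density to the hyperparameter values in each group, and proposes the next candidate where the ratio of the good density to the bad density is highest. This concentrates the search on promising regions as evidence accumulates. "Tree-structured" refers to its support for conditional search spaces, in which some hyperparameters exist only when others take particular values.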
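The sketch below illustrates the core TPE loop for a single continuous hyperparameter in plain Python. It is a simplified illustration, not evoML's implementation: the function names, the fixed kernel bandwidth, the `gamma` fraction used to split good from bad trials, and the number of candidates are all assumptions chosen for readability. Established implementations such as Hyperopt and Optuna's `TPESampler` add adaptive bandwidths, priors, and support for categorical and conditional hyperparameters.

```python
import math
import random


def parzen_density(x, points, bandwidth):
    """Gaussian kernel density estimate (Parzen window) of `points`, evaluated at x."""
    norm = 1.0 / (len(points) * bandwidth * math.sqrt(2 * math.pi))
    return norm * sum(math.exp(-0.5 * ((x - p) / bandwidth) ** 2) for p in points)


def tpe_minimise(objective, low, high, n_trials=50, n_startup=10,
                 gamma=0.25, n_candidates=24, seed=0):
    """Minimise objective(x) for x in [low, high] with a simplified 1-D TPE.

    Hypothetical helper for illustration; returns the best (x, loss) pair found.
    """
    rng = random.Random(seed)
    bandwidth = 0.1 * (high - low)  # fixed bandwidth: a simplifying assumption
    trials = []  # history of (x, loss) pairs

    for t in range(n_trials):
        if t < max(n_startup, 2):
            # Warm-up: sample uniformly until there is enough history to model.
            x = rng.uniform(low, high)
        else:
            # Split the history into "good" (lowest losses) and "bad" groups,
            # keeping at least one point in each.
            ranked = sorted(trials, key=lambda trial: trial[1])
            n_good = min(len(ranked) - 1, max(1, math.ceil(gamma * len(ranked))))
            good = [v for v, _ in ranked[:n_good]]
            bad = [v for v, _ in ranked[n_good:]]

            def ratio(c):
                # l(c) / g(c): high where good trials are dense and bad ones sparse.
                return parzen_density(c, good, bandwidth) / (
                    parzen_density(c, bad, bandwidth) + 1e-12)

            # Draw candidates from l(x) by perturbing good points, clipped to bounds.
            candidates = [
                min(high, max(low, rng.gauss(rng.choice(good), bandwidth)))
                for _ in range(n_candidates)
            ]
            x = max(candidates, key=ratio)
        trials.append((x, objective(x)))

    return min(trials, key=lambda trial: trial[1])
```

For example, `tpe_minimise(lambda x: (x - 2.0) ** 2, -5.0, 5.0)` returns the best `(x, loss)` pair found within the trial budget. The warm-up trials are pure random sampling; after that, candidates are drawn around the low-loss region and ranked by the good-to-bad density ratio.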

2. Non-Dominated Sorting Genetic Algorithm II (NSGA-II)

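NSGA-II is an evolutionary algorithm that optimises multiple objectives simultaneously. In each generation it creates new candidates by recombining and mutating existing ones, then keeps the best candidates according to two criteria: non-domination rank (performance across all objectives) and crowding distance (diversity). Rather than converging on a single best solution, it drives the population toward the Pareto front: the set of trade-off solutions in which no objective can be improved without worsening another.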
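What distinguishes NSGA-II is how it decides which candidates survive to the next generation. The minimal sketch below implements only that selection step (fast non-dominated sorting followed by crowding distance), assuming every objective is minimised. The recombination and mutation operators are omitted, and all function names and the example objective values are hypothetical, not evoML's API. In NSGA-II this selection is applied to the combined parent and offspring population.

```python
import math


def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimised)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated_fronts(points):
    """Fast non-dominated sort: group point indices into successive Pareto fronts."""
    n = len(points)
    beats = [[] for _ in range(n)]  # beats[p]: indices that p dominates
    beaten_by = [0] * n  # beaten_by[p]: number of points that dominate p
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(points[p], points[q]):
                beats[p].append(q)
            elif dominates(points[q], points[p]):
                beaten_by[p] += 1
        if beaten_by[p] == 0:
            fronts[0].append(p)
    i = 0
    while fronts[i]:
        next_front = []
        for p in fronts[i]:
            for q in beats[p]:
                beaten_by[q] -= 1
                if beaten_by[q] == 0:  # every point dominating q is now ranked
                    next_front.append(q)
        fronts.append(next_front)
        i += 1
    return fronts[:-1]  # drop the trailing empty front


def crowding_distance(points, front):
    """Crowding distance per index in a non-empty front (larger = less crowded)."""
    distance = {i: 0.0 for i in front}
    for m in range(len(points[front[0]])):
        ordered = sorted(front, key=lambda i: points[i][m])
        lo, hi = points[ordered[0]][m], points[ordered[-1]][m]
        distance[ordered[0]] = distance[ordered[-1]] = math.inf  # keep extremes
        if hi == lo:
            continue
        for k in range(1, len(ordered) - 1):
            gap = points[ordered[k + 1]][m] - points[ordered[k - 1]][m]
            distance[ordered[k]] += gap / (hi - lo)
    return distance


def select_survivors(points, n_survivors):
    """NSGA-II survivor selection: fill by front rank, then by crowding distance."""
    survivors = []
    for front in non_dominated_fronts(points):
        if len(survivors) + len(front) <= n_survivors:
            survivors.extend(front)
        else:
            dist = crowding_distance(points, front)
            ranked = sorted(front, key=lambda i: dist[i], reverse=True)
            survivors.extend(ranked[: n_survivors - len(survivors)])
            break
    return survivors


# Hypothetical objective vectors, e.g. (error, training time); lower is better.
points = [(1, 5), (2, 3), (3, 1), (2, 4), (4, 4), (5, 5)]
print(non_dominated_fronts(points))  # [[0, 1, 2], [3], [4], [5]]
print(select_survivors(points, 2))  # [0, 2]
```

When only two of the three non-dominated points can survive, the two extremes of the front are kept because their crowding distance is infinite, which preserves the spread of trade-offs.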

3. Random

Random search samples hyperparameter values from the search space at random. It is simple and often effective in high-dimensional spaces, particularly when only a few hyperparameters strongly affect performance. However, it can be less efficient than TPE or NSGA-II because it does not use the results of previous trials to guide where it samples next.
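A minimal sketch of random search in plain Python follows. The function name, the search-space format (a `(low, high)` tuple for a continuous range or a list of discrete choices), and the hyperparameter names `learning_rate` and `max_depth` are hypothetical and chosen for illustration; the toy objective stands in for training and validating a real model.

```python
import random


def random_search(objective, space, n_trials=30, seed=0):
    """Minimise objective(params) by sampling every hyperparameter independently.

    `space` maps each hyperparameter name to either a (low, high) tuple, sampled
    uniformly, or a list of discrete choices, sampled with equal probability.
    """
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(n_trials):
        params = {
            name: rng.uniform(*spec) if isinstance(spec, tuple) else rng.choice(spec)
            for name, spec in space.items()
        }
        loss = objective(params)  # no information from earlier trials is used
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


# Hypothetical search space and toy objective, for illustration only.
space = {"learning_rate": (0.001, 0.3), "max_depth": [3, 5, 7, 9]}


def toy_objective(params):
    return abs(params["learning_rate"] - 0.1) + abs(params["max_depth"] - 5) / 10


best_params, best_loss = random_search(toy_objective, space)
print(best_params, best_loss)
```

Because each trial is independent of the others, trials can run in parallel; the trade-off is that no trial benefits from what earlier trials found.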